With the advent of AI, we are only beginning to envision how dramatically it will change the management of our health.

Many people have already used Claude, Gemini, or ChatGPT to evaluate symptoms or interpret lab results. As models, apps, and users evolve, the possibilities for changing how care is delivered, accessed, and financed seem limitless.

From Carbon to Silicon

To fully grasp how AI might change healthcare, we first need to understand how our current system, built around humans and analog methods, limits our potential. Our health system was constructed on the assumption that information is stored locally in proprietary archives (on paper or in locally controlled EHRs) and that knowledge resides in the minds and hands of trained providers.

In 1999, the Institute of Medicine's report To Err Is Human estimated that between 44,000 and 98,000 Americans were dying in hospitals each year as a result of medical errors. Since then, clinicians and administrators have sought to fix the flaws in our system. With AI, we may be able to rethink care delivery to resolve these problems, freeing us from the bottleneck of a limited supply of trained human clinicians. The architects of hospitals and insurance companies never imagined that silicon could transcend carbon, yet the early days of generative AI already make clear that this technology will transform the way care is delivered. AI tools can play an important role in reducing patient harm while making our system more affordable and accessible.

Overcoming the Limits of Imagination

With new technologies, there is often a failure of imagination: society collectively struggles to see how breakthrough innovations can reshape the world. Sometimes this is because new technologies are immature and the infrastructure to scale them does not yet exist. It is also because people need time to learn how to take advantage of new things. As Chris Dixon has commented, when the film camera was invented, creators needed a long time to figure out that they could do more than film a staged play indoors. In healthcare, changes in the way physicians practice often require the retirement of one cohort of doctors and the rise of a new generation of providers who are more comfortable with newer tools (as was seen with the adoption of less invasive laparoscopic surgery).

Adaptation Requires Creative Destruction

Beyond the limits of imagination, administrators face practical barriers to change. How can one be sure it is safe, legal, or appropriate to use new AI technologies? If providers automate current processes, such as replacing in-person care with an AI-enabled app or digital therapeutic, how can they backfill the revenue lost from activities that become redundant? Will the data that AI depends on be available and exchangeable without sacrificing privacy and security?

Our healthcare system isn’t designed with accessible data as a core assumption. More than a pragmatic challenge, this is a business model issue: large health systems would prefer that you never leave their four walls (when you do, they call it “patient leakage”), because they operate as oligopolies that leverage market power to get better rates from payers. And yet, our health journeys take us outside those four walls, where our data is no longer available, integrated, or complete.

For AI to truly benefit everyone, we need a new data architecture that enables this future. Truly ubiquitous data will make services more readily available and of higher quality, while also enabling a marketplace of care solutions that are not held back by fear of data blocking. If data integration were simple, patients (and their primary care providers and navigators) could weigh cost against quality, and the forces of creative destruction would reshape services and lower costs.

The Four AI Agents of Your Health

In an AI-enabled future powered by a new data architecture, four “agents” are likely to emerge and work together to improve our health. Each represents a different objective function, and together they will be part of each patient’s life in an efficient and effective healthcare future. The framework rests on a simple idea: first analyze the state of events, then orchestrate actions based on that understanding. Jay Desai put this memorably; he would tell his team to “figure it out” and “get it done.” Jay’s charge is a great way to organize the work of managing health: figure it out, and get it done.

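In terms of “figuring it out,” two agents will be key: The Archivist, whose goal is to keep the health record complete, and The Diagnostician, which assesses risk based on that record.

Moving from the realm of “figuring it out” to “getting it done,” two other agents become important in your life: The Planner, which builds and adjusts the health plan, and The Guide, which keeps the user informed as that plan changes.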

Four Bots for the Win

The four bots could, in fact, be one larger AI system operating cohesively, and this seems likely. Separating them at first is still helpful because it emphasizes that they pursue four different objective functions that work together. The Archivist aims to ensure the record is always complete. Whenever new items enter the archive, The Diagnostician is prompted to update the risk assessment, just as new medical knowledge continually improves its foundation model. An updated risk assessment could then prompt The Planner to adjust the patient’s health plan. In turn, a change in the plan requires The Guide to update the user accordingly. The sequence could also run in the other direction. Perhaps the user tells The Guide about a new bout of scalp pain, prompting it to alert the other three bots so they can make appropriate adjustments. The Diagnostician might then call for a temporal ultrasound study to sort things out, which The Planner would need to fit into the user’s life, considering the user’s insurance status, physician networks, schedule, and so on, all explained to the user by The Guide.
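To make these hand-offs concrete, here is a minimal Python sketch of the event chain, assuming each agent is a simple function over a shared health state and that each agent acts only when the one upstream reports a change. Everything in it is hypothetical: the `HealthState` class, the `on_new_item` trigger, and the toy `RISK_RULES` and `PLAN_RULES` lookup tables stand in for real clinical models and are not medical logic or any vendor's API.

```python
from dataclasses import dataclass, field

# Hypothetical toy rules standing in for real clinical models; not medical logic.
RISK_RULES = {"new scalp pain": "scalp pain needs workup"}
PLAN_RULES = {"scalp pain needs workup": "schedule temporal ultrasound"}


@dataclass
class HealthState:
    record: list = field(default_factory=list)    # The Archivist: the complete record
    risks: set = field(default_factory=set)       # The Diagnostician: current risk flags
    plan: list = field(default_factory=list)      # The Planner: pending actions
    messages: list = field(default_factory=list)  # The Guide: what the user is told


def archivist(state, item):
    """Add an item to the record; return True only if it is new."""
    if item in state.record:
        return False
    state.record.append(item)
    return True


def diagnostician(state):
    """Recompute risk flags from the whole record; return only the newly raised ones."""
    new_risks = {RISK_RULES[i] for i in state.record if i in RISK_RULES} - state.risks
    state.risks |= new_risks
    return new_risks


def planner(state, new_risks):
    """Turn new risk flags into plan steps; return the steps added."""
    steps = [PLAN_RULES[r] for r in sorted(new_risks) if r in PLAN_RULES]
    state.plan.extend(steps)
    return steps


def guide(state, steps):
    """Explain each plan change to the user in plain language."""
    for step in steps:
        state.messages.append(f"Next step for you: {step}.")


def on_new_item(state, item):
    """Event chain: archive -> risk update -> plan update -> user update."""
    if not archivist(state, item):
        return  # nothing new, so nothing downstream needs to change
    new_risks = diagnostician(state)
    if new_risks:
        guide(state, planner(state, new_risks))


state = HealthState()
on_new_item(state, "new scalp pain")  # the user's report, relayed by The Guide
on_new_item(state, "new scalp pain")  # duplicate report: stopped at The Archivist
print(state.plan)      # ['schedule temporal ultrasound']
print(state.messages)  # ['Next step for you: schedule temporal ultrasound.']
```

Keeping the four objective functions as separate functions, even though they share one state, mirrors the point above: each can be improved or replaced independently, while the trigger chain keeps them coordinated. A duplicate report stops at The Archivist rather than re-triggering the plan.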

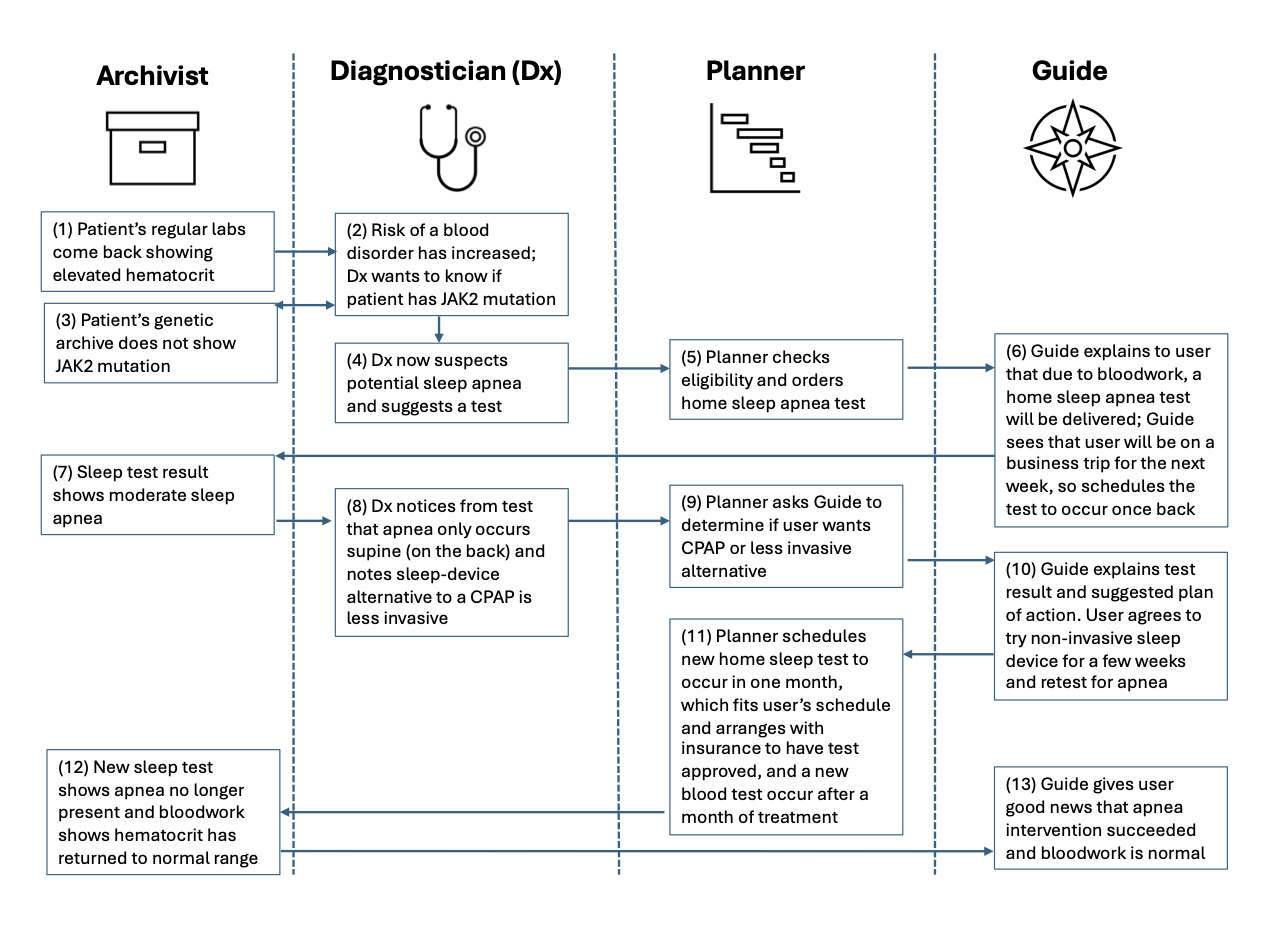

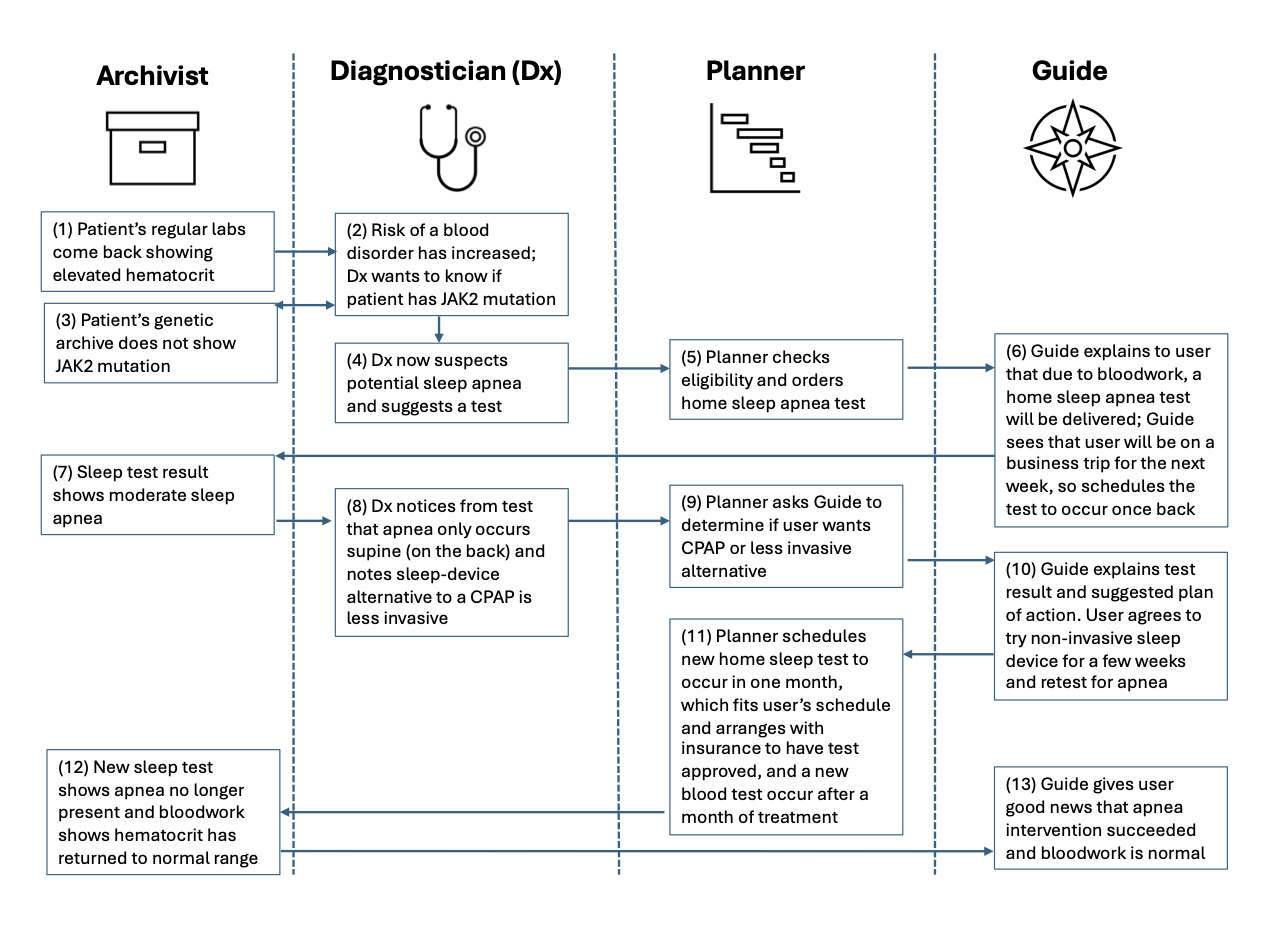

Example: Hematocrit Result Leads to Sleep Apnea Treatment

To show these agents in action, here’s how a real-world scenario might work, with the four agents collaborating to get to the bottom of an abnormal lab value.

This hypothetical example may or may not relate to how the author of this post spent the last two months figuring out a similar issue (thanks to the care team at Firefly). An agentic future would make this sequence even more seamless, ensuring that the archive, the risk assessment, the health plan, and the user’s mindset are cared for at every step of the journey, possibly without the need for human intervention. Importantly, an AI-driven model would remove much, if not all, of the human labor cost, freeing care from process bottlenecks and resource constraints.

Innovation as the Path to This Future

This sci-fi vision of the future may be closer than one might think. Companies like Zus and Health Gorilla already handle many of the tasks of an Archivist, as does Apple Health, to a large degree, for consumers (for entities that are properly linked). The Diagnostician already exists in the form of Google’s Med-PaLM models, and rival models are not far behind; one can already upload an archive to models like these to seek medical analysis. The Planner has further to go, but the venture market is replete with pitch decks from companies aiming to deploy agents that take over every administrative task in healthcare, from answering the phone (e.g., Clarion or Hyro), to preauthorizing care (e.g., Cohere Health), to handling billing (e.g., Akasa).

If doctors can have bots call patients to remind them of appointments, why can’t patients have bots take the call and schedule care for when it suits their busy lives (in sync with health plan requirements)? As for The Guide, this may be the most Black Mirror-esque component, but most people would benefit from an always-on agent like the AI assistant voiced by Scarlett Johansson in Her, who simply takes care of everything. ChatGPT’s voice mode is along those lines; just imagine the power of that interface when linked to the other three agents, entities that can actually “figure it out” and “get it done.” It’s not hard to imagine Apple rolling out an “Apple Health+”* service that leverages AI and the iPhone’s health archive to assess risks and help users get care; other wearable makers, such as Oura, may go down a similar path.

Linking Back to Our Terrestrial System of Care

How this AI future will integrate with our existing “terrestrial health system” remains an open question. By “terrestrial,” I mean all the buildings, offices, devices, labs, imaging centers, insurance companies, pharmacies, and HR departments that make up more than 18% of U.S. GDP. Those organizations already have a head start in adopting AI tools to make their work more efficient, effective, and scalable, aided by countless health tech startups and established companies. As noted in a prior post, The New Digital Care Architecture, for data to take a full seat at the table alongside doctors, drugs, and diagnostics, a myriad of technologies and processes need to evolve, including advances in both AI and the interoperability enabled by APIs.

These Four Agents represent something more personal: a model in which each person has guardian angels in the cloud that are always thinking about their health, meet them where they are, get them the care they require, and often anticipate what they need next before they realize it. How personalized agents would extend from, interact with, and refine our existing health system remains unclear, and this is an area ripe for innovation. Though much of the “how” in this vision is still open, what is clear is that the advance of AI agents will make much of care radically cheaper, more accessible, and more convenient.

So What?

In the coming months, future posts will explore more of the “so what,” aiming to identify actionable projects that can bring about this more active, effective, scalable, and affordable healthcare future. If we can create real markets for care, where providers and individuals manage budgets to optimize health in tune with users’ personal needs, creative destruction will be unleashed to reshape our system for the better.

Of course, many risks must be managed as we build a new healthcare future rooted in AI systems such as these. That’s why we need entrepreneurs, clinicians, regulators, and operators to collaborate in pursuit of “doing well by doing good,” for the sake of us all. If venture investors can help pull this future forward faster by financing the work, all the better.